Robot ethics, sometimes referred to as “roboethics”, concerns the moral issues that arise when robots are used. Examples include whether robots pose a threat to people in the long or short run, whether some uses of robots are problematic (in healthcare, or as ‘killer robots’ in war), and how robots should be designed so that they act ‘ethically’ (this last concern is also called machine ethics). Roboethics is a sub-field of the ethics of technology (specifically, information technology), and it has close links to legal as well as socio-economic concerns. Researchers from diverse fields are beginning to tackle ethical questions about creating robotic technology and deploying it in society in a way that still ensures the safety of humanity.

Although these issues are as old as the word ‘robot’ itself, serious academic discussion began around the year 2000. Roboethics requires the combined commitment of experts from several disciplines, who must adapt laws and regulations to the problems arising from scientific and technological achievements in robotics and AI. The main fields involved in robot ethics are robotics, computer science, artificial intelligence, philosophy, ethics, theology, biology, physiology, cognitive science, neuroscience, law, sociology, psychology, and industrial design.

History of Robot Ethics:

The word ‘robot’ derives from the Czech word ‘robota’, meaning ‘forced labour’. The term was coined in Karel Čapek’s play R.U.R. (‘Rossum’s Universal Robots’, 1920).

For a long time, ethics has been discussed in relation to the treatment of non-human and even non-living things and their potential “spirituality”. With the development of machinery and, eventually, robots, this line of thinking was also applied to robotics. The first publication to directly address robot ethics, and to lay its foundation, was ‘Runaround’, a science-fiction short story written by Isaac Asimov in 1942, which featured his well-known ‘Three Laws of Robotics’. Asimov repeatedly revised these three laws, and in his later science-fiction works he added a fourth, or zeroth, law to precede the first three. The short term “roboethics” was most likely coined by Gianmarco Veruggio.

An important event that propelled interest in roboethics was the First International Symposium on Roboethics, held in 2004 through the collaborative effort of Scuola di Robotica, the Arts Lab of Scuola Superiore Sant’Anna, Pisa, and the Theological Institute of Pontificia Accademia della Santa Croce, Rome. After two days of intense debate, anthropologist Daniela Cerqui identified three main ethical positions:

1. “Those who are not interested in ethics. They consider that their actions are strictly technical, and do not think they have a social or a moral responsibility in their work.

2. Those who are interested in short-term ethical questions. According to this profile, questions are expressed in terms of ‘good’ or ‘bad,’ and refer to some cultural values. For instance, they feel that robots have to adhere to social conventions. This will include ‘respecting’ and helping humans in diverse areas, such as implementing laws or helping elderly people. (Such considerations are important, but we have to remember that the values used to define the ‘bad’ and the ‘good’ are relative. They are the contemporary values of the industrialized countries.)

3. Those who think in terms of long-term ethical questions, about, for example, the ‘Digital divide’ between South and North, or young and elderly. They are aware of the gap between industrialized and poor countries, and wonder whether the former should not change their way of developing robotics in order to be more useful to the South. They do not formulate explicitly the question what for, but we can consider that it is implicit.”

Human factors in Roboethics

It seems inevitable that robots will become part of our everyday lives. Advances in safety and performance technologies mean that robots are being designed for a wide range of purposes: at home, at work, in education, in transport systems, and in health and social care, potentially in any setting. This development will be transformational, involving increasingly close interactions with people. However, because technology usually develops faster than we build a sound understanding of its consequences, it is vital that we rapidly expand the body of knowledge needed to create good standards for safe and ethical future human-robot interactions (HRI). Psychology and Human Factors offer the scientific approaches that will enable us to develop this important knowledge.

Roboethics in fiction

Roboethics as a science or philosophical topic has not made a strong cultural impact, but it is a common theme in science-fiction novels and films. One of the most popular films depicting the potential misuse of robotic and AI technology is The Matrix, which portrays a future in which the absence of roboethics brought about the downfall of humanity. The Animatrix, an animated anthology based on The Matrix, focused heavily on the potential ethical issues between humans and robots. Many of the Animatrix’s animated shorts are also named after Isaac Asimov’s fictional stories.

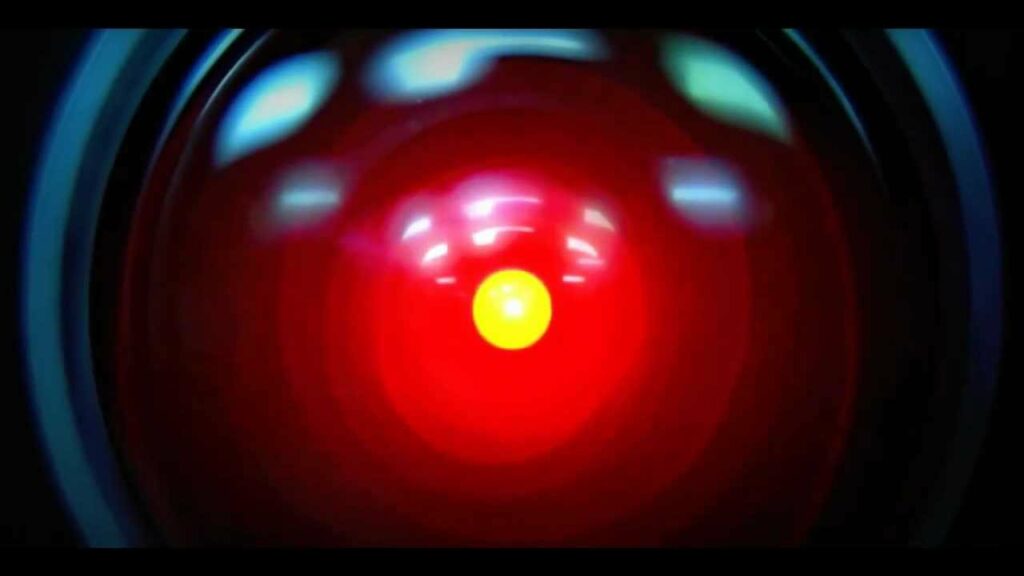

Although not strictly part of roboethics, the ethical behaviour of robots themselves has been a recurring theme of roboethics in popular culture. The Terminator series centres on robots run by an uncontrolled AI program with no restraint on eliminating its enemies. Like The Matrix series, it has a futuristic plot in which robots have taken control. The most famous case of a robot or computer without programmed ethics is HAL 9000 in the Space Odyssey series, in which HAL (a computer with advanced AI capabilities that monitors and assists the humans aboard a spacecraft) kills most of the crew to ensure the success of its assigned mission after its own existence is threatened.


Fatima Zahra is a BS Biochemistry student at Quaid-e-Azam University, Islamabad. She is interested in reading suspense stories and creative writing, and is also fond of cooking.