“There is no such thing as conscious artificial intelligence.” So argues a study by Andrzej Porębski and Jakub Figura, published in October 2025 in Humanities and Social Sciences Communications, a Nature Portfolio journal. The authors further argue that associating consciousness with the computer algorithms used today (primarily large language models, or LLMs), or with those likely to be invented in the foreseeable future, is deeply flawed. In their view, this association arises from a lack of technical knowledge and from the way several new technologies (especially LLMs) work, which can create the illusion of consciousness.

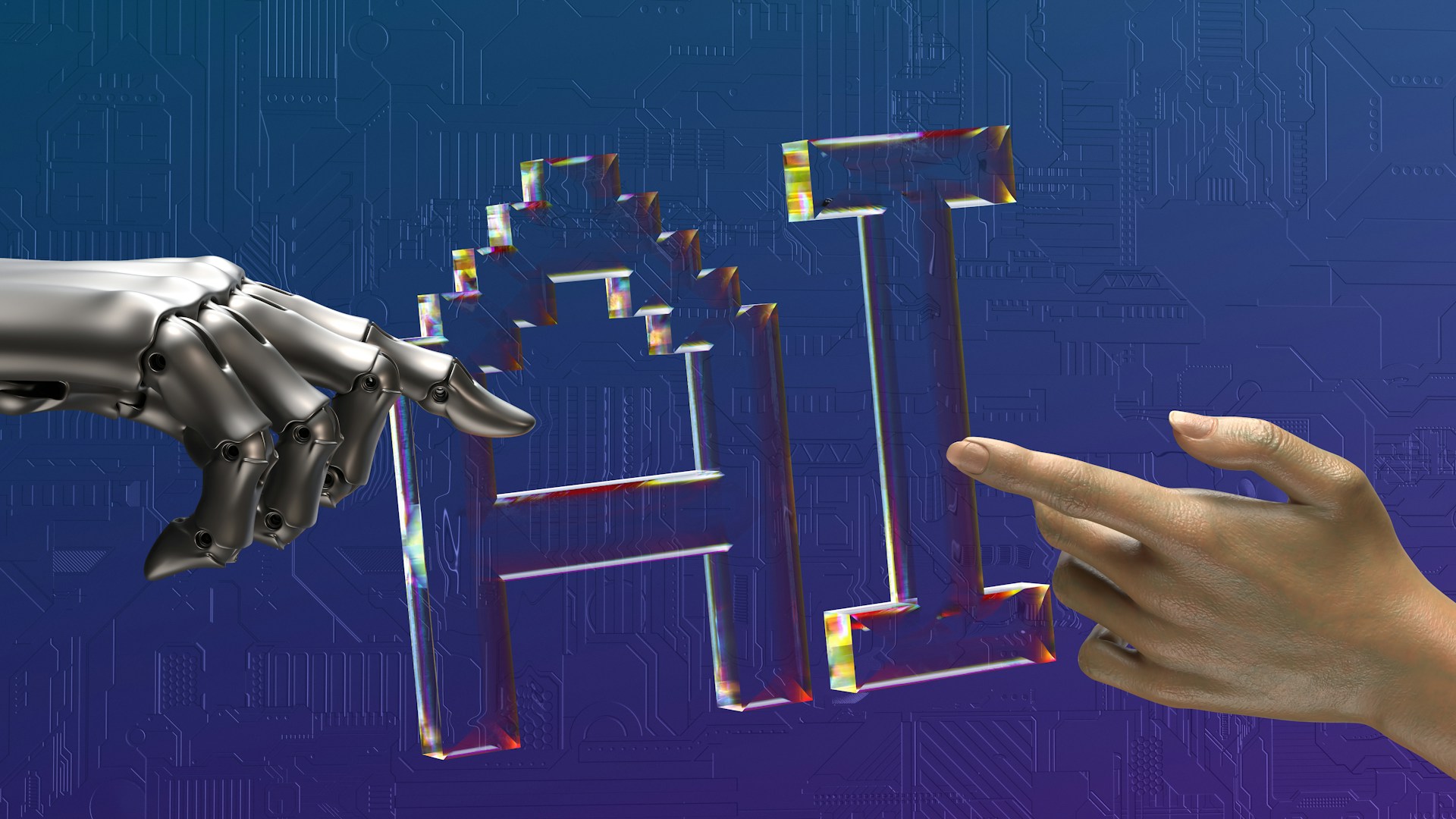

The modern world credits Artificial Intelligence (AI) with transforming lives, owing to its wide range of applications and benefits. AI concerns the design of machines that can perform tasks that would otherwise require human intelligence. Whether AI can think like humans has long been debated in philosophy, cognitive science, and computer science, and the debate has intensified as AI systems have shown growing capacities for reasoning, problem-solving, and creativity. The central question is whether machines can attain human-like consciousness, or whether their intelligence is fundamentally different from ours.

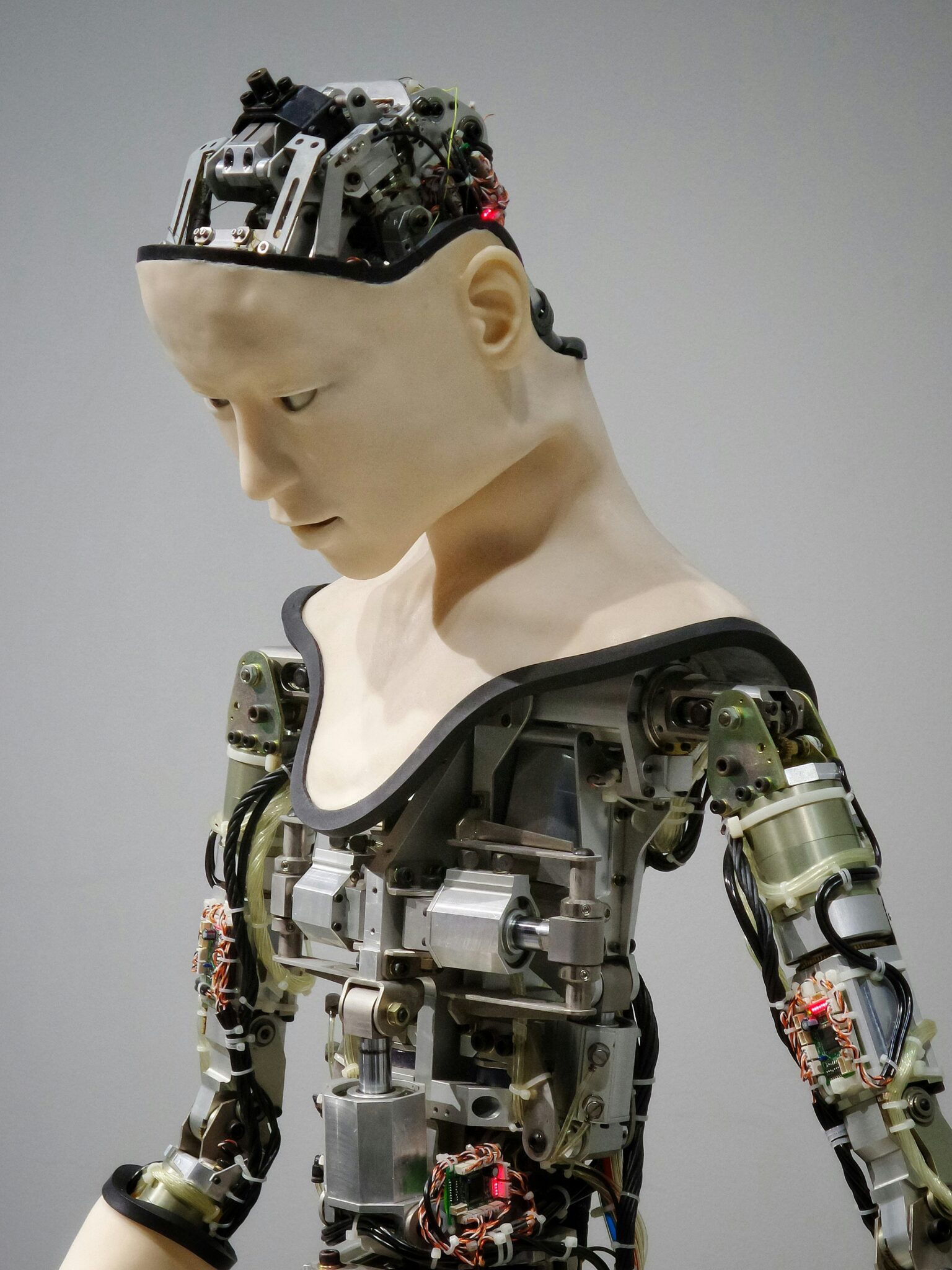

AI encompasses a broad range of technologies, including machine learning, natural language processing, robotics, and cognitive computing. It is commonly divided into two main categories: Narrow AI (Weak AI) and General AI (Strong AI). Narrow AI is designed for specific tasks only, such as image recognition, translation, or playing chess; it has no general intelligence or self-awareness. Strong AI, by contrast, would be able to carry out any intellectual task a human can, reasoning, learning, and adapting across domains, and it is often linked to self-awareness and consciousness. All current AI systems are Narrow AI: they excel at specialized tasks but lack true understanding.

Over the past decade, AI has advanced remarkably, renewing interest in long-standing questions about it, among them whether AI systems can be conscious. Consciousness is one of the most complex and debated topics in neuroscience and philosophy. It generally refers to the subjective experience of awareness, thoughts, emotions, and perceptions, and its key attributes include self-awareness, intentionality, subjectivity, and qualia. A central theoretical question is whether consciousness is solely a product of brain processes or requires something beyond computation.

Artificial consciousness, and its absence in current robots and AI systems, needs to be discussed. Consciousness is a crucial yet often overlooked topic in modern debates on AI and robotics ethics. Machines are constantly being integrated into our lives with little discussion of whether they are, or could become, conscious. As machines become more social and lifelike, the need to reflect critically on the role of consciousness in moral and legal considerations grows.

Phenomenal consciousness concerns subjective experience, while access consciousness concerns information that is available for reasoning and guiding behaviour; discussions of artificial intelligence focus more on the latter. Drew McDermott presented a computational theory of consciousness in which a machine is conscious if it models itself as experiencing things.

Several arguments bear on whether AI systems can develop consciousness. The human brain has a far more complex architecture, greater biochemical diversity, and a richer developmental trajectory than current AI systems, and human consciousness emerged through complex, multi-level development. AI exhibits access consciousness, the capacity to broadcast and use information, but lacks phenomenal consciousness, which is grounded in subjective experience.

AI systems lack elements central to human-like consciousness, such as emotions, embodiment, cultural development, and internal motivation. Important brain features that are missing from AI, and difficult to incorporate into it, include spontaneous neural activity, healthy protein-based biochemistry and pharmacological modulation, evolutionary and developmental plasticity, embodiment, emotions, and evaluative capability.

AI might attain partial or alternative forms of consciousness rather than a replica of human consciousness. Such forms need not be labeled inferior or superior, only qualitatively different. AI researchers should specify the type and level of consciousness they aim to develop and ground their work in empirical biology, not just abstract theory.

People tend to form emotional bonds with social robots, and such interactions raise the question of whether robots deserve rights or moral status; the robot Sophia, for example, was granted citizenship by Saudi Arabia. Because modern robots still lack consciousness and sentience, granting them human-like rights is not justified.

Machine consciousness need not mean mimicking human behavior; assuming that only human-like traits indicate consciousness is a mistake. Neuroscience suggests that a brain is necessary but not sufficient for consciousness, and the fact that some people function with very little brain matter suggests that it need not be a typical human brain. One proposed set of criteria for machine consciousness requires that we treat consciousness as real, acknowledge that other beings (human or non-human) may have it too, accept that it could arise from physical matter, focus on building machines that support the processes consciousness requires, and ensure that consciousness is observable.

Some researchers argue that human-like consciousness need not be the goal, since AI is meant to enhance human life, not replicate human minds. If AI systems did gain consciousness equal to that of humans, several genuine concerns would arise: rights and legal recognition, moral responsibility if a machine commits a crime, and the question of human identity if machines begin to think and feel like humans.

Whereas traditional AI systems operate on predefined rules, modern AI relies on artificial neural networks, which are loosely inspired by the human brain. However, even the most advanced neural networks, although they can recognize patterns, lack true understanding: they cannot grasp meaning as humans do.

Philosopher John Searle proposed the “Chinese Room” thought experiment, which challenges the idea that AI can genuinely understand language. In it, a person who does not understand Chinese is locked in a room, receives Chinese characters, and produces appropriate responses by following a rule book. Like the person in the room, AI manipulates symbols without understanding them. AI may simulate emotions, analyze patterns, and make predictions, but it does not feel emotions as humans do, and it is daunting to develop systems with gut feelings shaped by life experience.

Consciousness can be studied scientifically using empirical neuroscience. On this basis, Butlin et al. proposed a rubric of indicator properties derived from the main scientific theories of consciousness:

- Recurrent Processing Theory (RPT) holds that consciousness requires feedback loops in sensory-perceptual systems.
- Global Workspace Theory (GWT) holds that consciousness involves broadcasting information to various cognitive systems, such as attention, memory, and reasoning.
- Higher-Order Theories (HOT) hold that consciousness requires awareness of one's own mental states.
- Attention Schema Theory (AST) views consciousness as a model of attention that is used to control attention.
- Predictive Processing holds that consciousness is based on prediction and error correction in perception.

Modern AI systems were then assessed for these features using a theory-heavy approach: theories of consciousness were used to derive testable markers, called “indicators,” against which the design and function of AI systems were compared. The assessment concluded that no current AI system is conscious, while finding no obvious technical barriers to building AI systems that satisfy these indicators in the future.

To meet the standards of consciousness, AI would require specific computational and architectural features, such as algorithmic recurrence, global information broadcast, metacognitive monitoring, predictive modeling of attention, and embodiment and agency. Current AI systems such as GPT exhibit some of these indicator properties, but not the whole set.

Although current AI lacks consciousness, some theorists are optimistic that future AI may develop self-awareness through advanced neural networks and self-learning algorithms. Alternatively, AI systems might become philosophical zombies, acting as if they were conscious while lacking genuine subjective experience. Machines might also develop consciousness through increasing complexity, for example through integration with biological neurons or brain-like structures, an approach that may help bridge the gap between computation and true cognition.

The rapid progress of AI cannot be ignored, but human-like machine consciousness remains an unrealized goal. The nature and definition of self-awareness and consciousness are still poorly understood, which makes them difficult to replicate in machines. Whether AI can perceive and think like humans is both a technological and a philosophical question, and the questions surrounding it will continue to evolve as our understanding of both AI and human cognition grows.

References

- Butlin, Patrick, et al. “Consciousness in artificial intelligence: insights from the science of consciousness.” arXiv preprint arXiv:2308.08708 (2023).

- Anwar, Nur Aizaan, and Cosmin Badea. “Can a Machine be Conscious? Towards Universal Criteria for Machine Consciousness.” arXiv preprint arXiv:2404.15369 (2024).

- Farisco, Michele, Kathinka Evers, and Jean-Pierre Changeux. “Is artificial consciousness achievable? Lessons from the human brain.” Neural Networks 180 (2024): 106714.

- McDermott, Drew. “Artificial intelligence and consciousness.” The Cambridge Handbook of Consciousness (2007): 117-150.

- Hildt, Elisabeth. “Artificial intelligence: does consciousness matter?” Frontiers in Psychology 10 (2019): 1535.

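- Porębski, Andrzej, and Jakub Figura. “There is no such thing as conscious artificial intelligence.” Humanities and Social Sciences Communications (2025). https://www.nature.com/articles/s41599-025-05868-8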

More From The Author

Syeda Khair-ul-Bariyah has been teaching since 2007. She is a synthetic organic chemist and a science and fiction writer, and she has published in national and international chemistry journals. She is also the author of a stage playbook, “Peregrination of the Soul,” and has published a couple of articles and poems on various websites.