Cyber-attacks are no longer science fiction; they are a stark reality threatening our digital way of life. Digitalization is taking hold of almost every sector of growth worldwide. Pakistan is no exception: here it now reaches everything from remote jobs and Siemens controllers that regulate electricity generation to NADRA national identity cards, cloud infrastructure, and money transactions.

Every organization in the professional world now has to move its data onto digital systems, which hold confidential and vital information about every individual. As digitalization grows, so does the risk that an outside intruder will breach that data, which is what we call a cyber-attack.

And this is how cybersecurity and digitalization go hand in hand.

“We apologise for the inappropriate messages sent through Bykea. We can confirm that this was a third-party communication tool which got compromised.” (Bykea app, 1:21 AM, June 13, 2023)

Recent news of a security intrusion in the Bykea app caused panic among its users. Such incidents, even when resolved, leave a lasting impression of mistrust. As a result, many Pakistanis, including myself, refrained from using the Bykea app for an extended period as a precaution against being hacked.

Consequently, Bykea’s operations were hindered, with a decline in its number of users and financial losses for the company. Apps like Bykea are not the only targets: the economy and government-related database services are also in great danger.

THE HISTORICAL OVERVIEW OF CYBER-ATTACKS

The intrusion in the Bykea app is far from the first security incident in computing history; the story begins in the late 1960s. On October 29, 1969, the team of Leonard Kleinrock, a renowned professor at UCLA, sent the first message over ARPANET, the first network of its kind, to the Stanford Research Institute. The message was meant to be “LOGIN”, but the system crashed after delivering only the first two letters, “LO”. It was a failure rather than a deliberate attack, yet it showed early on how fragile networked systems can be.

The next milestone was the first computer virus, Creeper, written by Robert Thomas in the early 1970s. It moved across the network and printed the message “I’m the Creeper: catch me if you can”. Soon afterwards, the first anti-virus program, Reaper, was created to remove it. From there, cyber-attacks kept growing: what began as harmless messages evolved into invading systems and stealing valuable, confidential information, including in wartime.

Cyber-attacks mainly fall into three types: criminal, political, and personal. Criminal cyber-attacks are primarily intended for financial gain, whether by stealing data, disrupting business, manipulating confidential records, or simply selling stolen information on the dark web. Political and personal cyber-attacks, by contrast, are driven by political agendas or by personal enmity and desires.

Whether cyber-attacks can ever be used fairly is a debated topic. Some argue that they can be used within ethical boundaries as a valuable tool for national defence. At the same time, the potential for collateral damage and violations of privacy makes the word “fair” hard to apply to cyber-attacks.

PRINCIPLES AND OBJECTIVES OF INFORMATION SECURITY

The key framework for understanding cybersecurity measures is built around three goals, known as the CIA triad (or, in reverse order, AIC): confidentiality, integrity, and availability.

- Confidentiality: It means that only authorized parties can read the data. The main technique is encryption, which encodes data before it travels from one system to another. If data sent from System A to System B is by any chance intercepted, the intruder cannot read the encrypted data without the key that System B uses to decrypt it. Hence, the data stays secure and confidential.

- Integrity: It means that data arrives and remains exactly as it was created, with no unauthorized or accidental modification. The 1969 UCLA message is a simple illustration: “LOGIN” was sent, but only “LO” arrived, so the receiver did not get the data the sender intended. Maintaining the accuracy and trustworthiness of data is the second main goal of cybersecurity.

- Availability: It assures that data and services are available to authorized users whenever they are needed. Website crashes and heavy server load threaten this goal, and attackers exploit that deliberately: in a DDoS (distributed denial-of-service) attack, they flood a network or server with traffic from many sources until legitimate users can no longer reach it.
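
The integrity goal can be made concrete with a small sketch. It assumes the sender publishes a SHA-256 fingerprint alongside the message; the function names `digest` and `is_intact` are illustrative and not part of any standard. It uses only Python's standard `hashlib` and `hmac` modules.

```python
import hashlib
import hmac


def digest(message: bytes) -> str:
    """Return the SHA-256 fingerprint of a message as a hex string."""
    return hashlib.sha256(message).hexdigest()


def is_intact(received: bytes, expected_digest: str) -> bool:
    """True only if the received bytes hash to the fingerprint the sender shared."""
    # compare_digest performs a constant-time comparison of the two strings.
    return hmac.compare_digest(digest(received), expected_digest)


sent = b"LOGIN"
fingerprint = digest(sent)  # assumed to reach the receiver over a trusted channel

print(is_intact(b"LOGIN", fingerprint))  # True: the message arrived unchanged
print(is_intact(b"LO", fingerprint))     # False: the message was truncated
```

A plain hash only detects accidental damage. Against an attacker who can alter both the message and the fingerprint, a keyed scheme such as HMAC or a digital signature would be needed.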

INSIGHTS BY CYBER SECURITY EXPERTS

I have collected a few insights from cyber security experts to give you an idea of how drastically cyber-attacks are evolving. Based on published reports (Department of Homeland Security 2014, Sebastian Bortnik 2012, APWG 2013, Osterman Research 2015) and a conference held in the USA (Cybersecurity, Stanford, CA, 2014), it was recognized that clever cyber criminals might be capable of launching attacks that harm the reliability, accessibility, or confidentiality of cyber services or government-related database services.

In addition, in 2014 the APWG (Phishing Activity Trends Report) reported that confidential and essential data in Pakistan had started to be compromised through phishing websites. Another discussion among the delegates noted that about $445 billion is lost annually to cyber-crime.

“Attack graphs provide a powerful visualization of potential attack paths, helping us understand complex security vulnerabilities in interconnected systems.” – Dorothy Denning, Cybersecurity Pioneer. (Source: Denning, D. “Graph-Based Intrusion Analysis.” Proceedings of the 2001 IEEE Symposium on Security and Privacy)

AN ESSENTIAL TOOL OF CYBERSECURITY: ATTACK GRAPHS

In today’s interconnected digital landscape, the interaction of systems, whether complex or simple embedded ones, with their environment can become challenging to manage and can lead to uncertain or unexpected behaviour.

Each such sequence of interactions is referred to as a system execution, or run. Some executions lead to adverse effects and are known as failure scenarios, because they violate correctness rules defined for the system. When the cause of a failure scenario is not an ordinary malfunction but a deliberate intrusion, the scenario is an attack, and an attack graph is a compact representation of such attack scenarios.

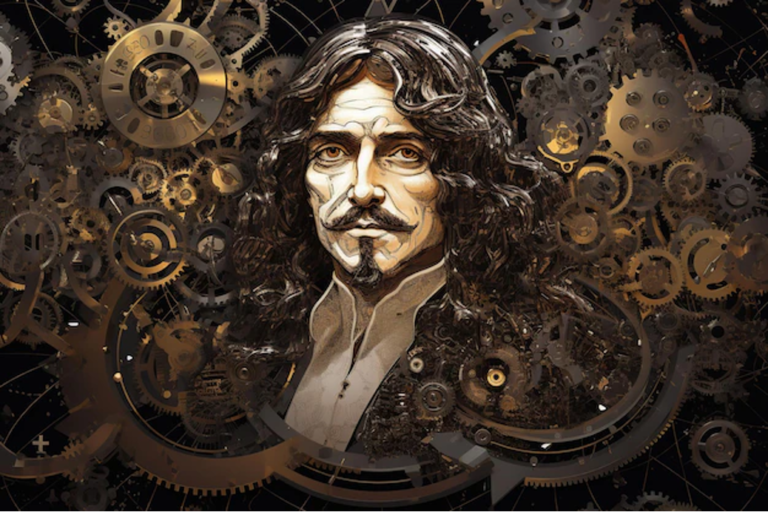

Under the surface, attack graphs were first defined as finite automata, called state enumeration graphs. The definition was later refined using AND/OR graphs, called exploit dependency graphs, and then generalised as a particular case of Bayesian networks, called Bayesian attack graphs. As a result, several definitions of attack graphs are in circulation today, which makes the field hard for novices to enter.

The concept of an attack graph was first put forth by Phillips and Swiler in 1998. An attack graph is a model of how a computer system can be attacked: it lays out the vulnerable points that an intruder could exploit and how they connect. This makes it a crucial tool in cybersecurity.

By mapping out vulnerabilities and identifying potential exploitations by third parties, security analysts can effectively defend against and address the most critical vulnerabilities in the system. This proactive approach helps safeguard organizations with large amounts of data from intrusion and misuse. Given the prevailing security issues in Pakistan, this subject holds great importance.

Understanding Attack Paths Through An Example

Think of an attack graph as a map showing how a hacker might move through different nodes, essentially machines or devices in a network, to exploit weaknesses and gain unauthorized access. To illustrate, let’s delve into an example involving three machines: A, B, and C.

Machines B and C act as a web server (a system that delivers web content such as websites) and a database server (which stores and manages the data), respectively. The network’s firewall allows HTTP and SSH requests from machine A to machine B. HTTP (HyperText Transfer Protocol) carries web requests, while SSH (Secure Shell) provides secure remote login. Normally, a user on machine A makes an HTTP request to machine B, which then queries the database on machine C.

The firewall blocks direct access from machine A to machine C, so any SSH request from A to C is dropped and an attacker on machine A cannot reach the database directly. Instead, the attacker can first launch a command injection attack on the web server (machine B) to gain a foothold. From there, the attacker exploits a vulnerability in the database server on machine C with a SQL (Structured Query Language) injection attack, which reads and manipulates the data through crafted queries. In this way, the intruder gains access to the restricted data.

To conclude the example: if an attacker compromises a web server (machine B) and then exploits a database server (machine C), they could gain access to restricted data.
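
The three-machine example can be written down as a tiny graph and searched for attack paths. This is a minimal sketch: the machine names and techniques come from the example, while the data layout and the function names `build_graph` and `attack_paths` are illustrative assumptions.

```python
# Each tuple is one attacker step: (from_machine, to_machine, technique).
# There is no A -> C step because the firewall blocks direct access.
ATTACK_STEPS = [
    ("A", "B", "command injection over HTTP"),
    ("B", "C", "SQL injection"),
]


def build_graph(steps):
    """Turn a list of steps into an adjacency map: machine -> [(next, technique)]."""
    graph = {}
    for src, dst, technique in steps:
        graph.setdefault(src, []).append((dst, technique))
    return graph


def attack_paths(graph, start, goal, visited=None):
    """Yield every cycle-free path from start to goal as a list of steps."""
    visited = (visited or set()) | {start}
    if start == goal:
        yield []
        return
    for nxt, technique in graph.get(start, []):
        if nxt not in visited:
            for rest in attack_paths(graph, nxt, goal, visited):
                yield [(start, nxt, technique)] + rest


graph = build_graph(ATTACK_STEPS)
paths = list(attack_paths(graph, "A", "C"))
for path in paths:
    hops = " -> ".join(f"{src} [{technique}]" for src, _, technique in path)
    print(hops + " -> C")
# prints: A [command injection over HTTP] -> B [SQL injection] -> C
```

The single path found matches the scenario above: the database is unreachable directly, but reachable in two steps through the web server.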

An Overview of the Operation Of An Attack Graph

An attack graph is called a graph because, like any other graph, it captures the relationships between entities through interconnected nodes.

Creating attack graphs involves:

- Identifying potential attack paths: This initial step involves identifying all the possible routes an attacker could take through the network.

- Analyzing vulnerabilities: In the second phase, every possible entry point that could attract an intruder is closely studied and examined.

- Establishing attack templates: Known attack vectors and tactics are turned into attack templates, which describe each attack step in an organized, reusable way.

- Constructing the graph itself: Lastly, the graph is built, with nodes representing the machines or states of the network and edges representing the vulnerabilities an attacker can exploit to move between them.
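
The steps above can be sketched in a few lines of code. This is only an illustration under stated assumptions, not how any particular tool works: each attack template is modelled as a set of facts the attacker must already hold (`requires`) and a set of facts the attack yields (`grants`), and the graph is built by applying templates until nothing new can be gained. The class `AttackTemplate`, the fact strings, and the function `build_attack_graph` are all hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackTemplate:
    name: str
    requires: frozenset  # facts the attacker must already hold
    grants: frozenset    # facts the attacker gains if the attack succeeds


# Hypothetical templates for a web server (B) and a database server (C).
TEMPLATES = [
    AttackTemplate("command injection on web server",
                   frozenset({"access:A", "reach:A->B:http"}),
                   frozenset({"access:B"})),
    AttackTemplate("SQL injection on database",
                   frozenset({"access:B", "reach:B->C:sql"}),
                   frozenset({"access:C"})),
]

INITIAL_FACTS = frozenset({"access:A", "reach:A->B:http", "reach:B->C:sql"})


def build_attack_graph(initial, templates):
    """Apply templates repeatedly; nodes are fact sets, edges are attacks."""
    states = {initial}
    edges = []
    frontier = [initial]
    while frontier:
        state = frontier.pop()
        for template in templates:
            applicable = template.requires <= state
            gains_something = not template.grants <= state
            if applicable and gains_something:
                new_state = state | template.grants
                edges.append((state, template.name, new_state))
                if new_state not in states:
                    states.add(new_state)
                    frontier.append(new_state)
    return states, edges


states, edges = build_attack_graph(INITIAL_FACTS, TEMPLATES)
for before, name, after in edges:
    print(f"{name}: gains {sorted(after - before)}")
```

In this sketch each node is a complete picture of what the attacker controls, which is close in spirit to a state enumeration graph. It also shows why such graphs grow quickly as more machines and templates are added.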

Various tools can generate these graphs automatically. Once created, the graphs are used to assess network vulnerabilities, predict attack paths, and identify weak points that need reinforcement.

CHALLENGES FACED BY ATTACK GRAPHS

Attack graphs can be challenging to handle at scale. In an extensive network they become large and complex, making them difficult to manage. Dynamic changes in networks and devices also make traditional analysis methods less effective.

To address these issues, researchers use intelligent agents and algorithms to streamline graph creation and analysis. One promising approach simplifies attack graphs using the A* prune algorithm. Modern techniques such as the Random Forest algorithm also help predict and identify attack locations, improving the effectiveness of cyber defence.
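
To give a feel for what pruning means here, the sketch below keeps only the attack paths whose total effort fits within a budget and returns them cheapest first. It is not the A* prune algorithm itself, only a simplified best-first search that discards over-budget branches early. The network, the effort values, and the function name `cheapest_paths` are hypothetical.

```python
import heapq

# Hypothetical weighted attack graph: node -> [(next_node, attacker_effort)].
GRAPH = {
    "internet": [("web", 2), ("vpn", 6)],
    "web": [("db", 3), ("app", 1)],
    "app": [("db", 1)],
    "vpn": [("db", 1)],
}


def cheapest_paths(graph, start, goal, budget):
    """Return (effort, path) pairs from start to goal within budget, cheapest first."""
    found = []
    queue = [(0, [start])]  # priority queue ordered by accumulated effort
    while queue:
        cost, path = heapq.heappop(queue)
        node = path[-1]
        if node == goal:
            found.append((cost, path))
            continue
        for nxt, effort in graph.get(node, []):
            new_cost = cost + effort
            if nxt in path or new_cost > budget:
                continue  # prune: would loop back or exceed the budget
            heapq.heappush(queue, (new_cost, path + [nxt]))
    return found


for cost, path in cheapest_paths(GRAPH, "internet", "db", budget=5):
    print(cost, " -> ".join(path))
# prints:
# 4 internet -> web -> app -> db
# 5 internet -> web -> db
```

The route through the VPN is never explored because its first step alone exceeds the budget. Discarding branches this early is what keeps a large graph manageable.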

In the context of IoT (Internet of Things) technology, where various devices are interconnected, challenges arise due to diverse devices, rapid changes, and specific communication protocols. Attack graphs need to consider these factors to model vulnerabilities accurately.

TO CONCLUDE ON THE ESSENCE OF CYBER CRIMES IN PAKISTAN

Pakistan faces a shortage of skilled security analysts. Despite a considerable number of doctors, teachers, writers, and business professionals, the country lacks expertise in cybersecurity. According to the FIA (Federal Investigation Agency), this lack of expertise leaves Pakistan exposed to such attacks. Raising awareness about cyber threats and about tools like attack graphs is essential for securing our nation.

Attack graphs are handy because they highlight how vulnerabilities spread. When one node is compromised, nearby nodes become susceptible, forming a chain reaction. By analyzing these graphs, security experts can pinpoint areas that need strengthening and design strategies to mitigate potential threats.

Currently, there is a lack of awareness among many people in Pakistan regarding these vital aspects of cybersecurity. If Pakistan invests in nurturing security analysts and cybersecurity professionals, it can significantly enhance the protection of its prominent organizations.

Looking to the future, as technology advances and threats evolve, understanding and using attack graphs becomes vital. Organizations can proactively defend their systems by predicting and analyzing potential attack paths. As researchers continue to refine and simplify these techniques, they enable a more secure digital future.

References:

- https://www.sciencedirect.com/science/article/abs/pii/S0167404822004734

- https://www.researchgate.net/publication/285463275_Use_of_Attack_Graphs_in_Security_Systems

- https://www.researchgate.net/figure/Example-of-attack-graph_fig2_235979981

- https://kijoms.uokerbala.edu.iq/home/vol8/iss3/3/

- https://www.hindawi.com/journals/scn/2019/2031063/

- https://100.ucla.edu/timeline/the-internets-first-message-sent-from-ucla

- https://xmcyber.com/glossary/what-are-attack-graphs/

- https://studymoose.com/cyber-security-challenges-in-pakistan-essay
